This series started with a 1:1 conversation with one of my reportees, who asked me a simple question: “With everything happening in AI… should I be worried, and what will happen to jobs in 5-10 years?” It wasn’t a dramatic question. It wasn’t about headlines. It was about career, growth, and uncertainty. That … Continue reading Will Jobs Exist as we know? (The Fear)

Tag: llm

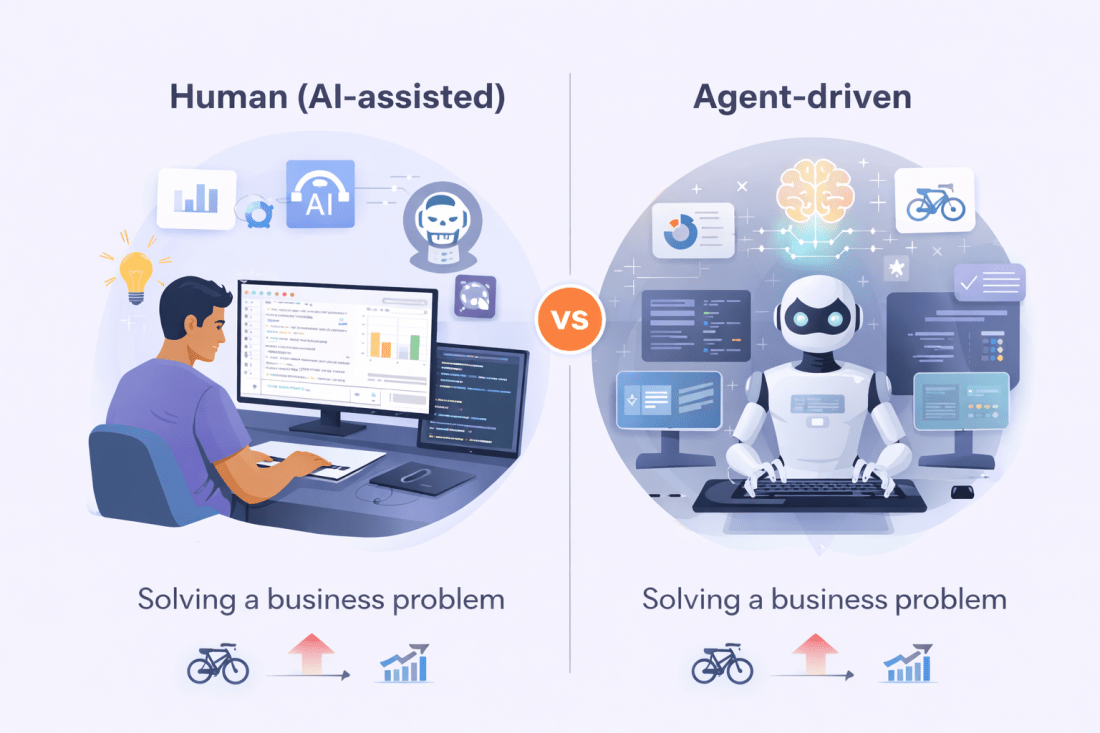

How to Think About AI Systems in 2026

Why GenAI, Predictive Models, and Agents Need to Work Together

I’ve been working in AI since well before the GenAI wave, and one thing that has become almost annoying in the last couple of years is how quickly every problem gets thrown at an LLM, with the expectation that it will magically solve everything. It won’t. … Continue reading How to Think About AI Systems in 2026

Why Do Multi-Agent LLM Systems Fail? Insights from Recent Research

As GenAI evolves, multi-agent systems (MASs) are gaining traction, yet many remain at the proof-of-concept (PoC) stage. This is where research papers and surveys help. Over the weekend, I explored where these systems fail and found a fascinating study worth sharing. MASs promise enhanced collaboration and problem-solving, but ensuring consistent performance gains over single-agent frameworks … Continue reading Why Do Multi-Agent LLM Systems Fail? Insights from Recent Research

Optimizing RAG Systems: A Deep Dive into Chunking Strategies.

In today’s AI systems, especially in Generative AI, the main challenge isn’t building a basic system with, say, 70% accuracy; it’s pushing that system past 90% and making it reliable for real-world production. Optimizing RAG (Retrieval-Augmented Generation) systems is essential for reaching this level. Effective chunking, one of the foundational … Continue reading Optimizing RAG Systems: A Deep Dive into Chunking Strategies.

RAG Components – 10,000 ft Level

When you look at GenAI, and specifically LLMs, from a usage point of view, there are a few techniques for interacting with them. Which one you choose depends on whether you need to bring in external data or just use LLMs directly for your tasks. RAG is an important and widely adopted technique because it helps you … Continue reading RAG Components – 10,000 ft Level