Why GenAI, Predictive Models, and Agents Need to Work Together

I’ve been working in AI since well before the GenAI wave, and one thing that has become almost annoying in the last couple of years is how quickly every problem gets thrown at an LLM—with the expectation that it will magically solve everything.

It won’t.

GenAI is powerful, but it’s not a magic pill. If there’s one post I wanted to write before the end of the year, it’s this one: how to think about building AI systems that actually solve real-world problems.

We’re moving from models that generate insights to systems that take actions. And that shift requires combining predictive models, business logic, and generative orchestration—rather than betting everything on a single model.

Step 1: Start With the Problem, Not the Model

Everything starts with a deceptively simple question: What kind of problem are we trying to solve?

We often hear statements like:

- “We want to predict churn.”

- “We need a chatbot for customer support.”

- “We want to summarize documents.”

- “We want to find hidden patterns in data.”

This step—properly framing the problem—is the most important one. Most AI failures happen here.

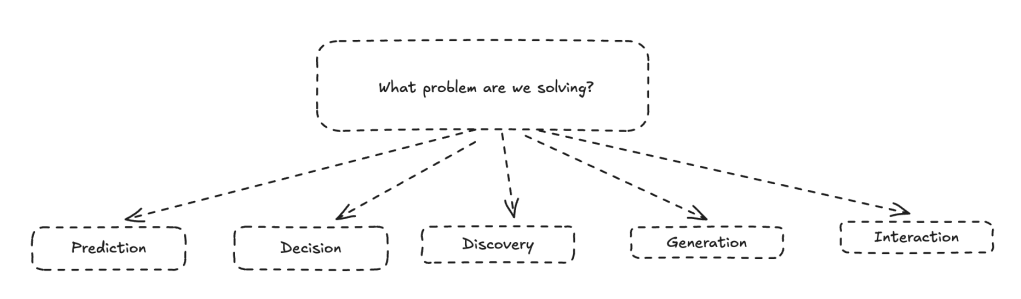

Five Core AI Task Types

A useful way to think about AI work is by grouping problems into five broad task types.

Let’s run through the five categories, the kinds of problems each one covers, and the models typically used for them:

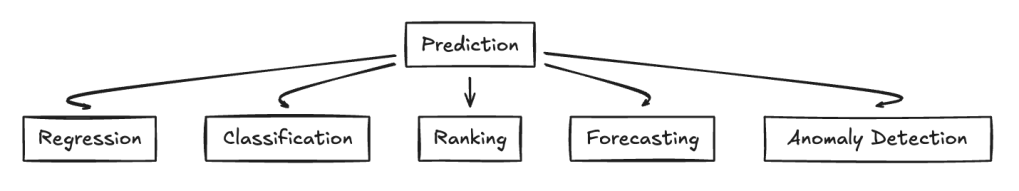

1. Prediction

Classic supervised learning problems. Given input data, predict an outcome.

Examples:

- Credit score → default risk

- Images → labels

Typical models include logistic regression, random forests, gradient-boosted trees, and neural networks. Predicting whether a loan applicant will default, using their financial features, is a pure prediction task: we train on historical data and forecast outcomes for new applicants.
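To make the train-then-forecast loop concrete, here is a minimal sketch of logistic regression fitted with plain gradient descent, using only the Python standard library. The two features (a credit score scaled to 0..1 and a debt-to-income ratio), the six historical rows, the learning rate, and the epoch count are all made up for illustration. In practice you would more likely use an existing library than hand-roll the training loop.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit weights and a bias with plain batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Prediction error for this row under the current parameters.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


def predict_default_risk(weights, bias, x) -> float:
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Invented history: (scaled credit score, debt-to-income ratio); 1 = defaulted.
history = [(0.9, 0.1), (0.8, 0.2), (0.7, 0.3), (0.4, 0.6), (0.3, 0.7), (0.2, 0.8)]
defaulted = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic(history, defaulted)
safe = predict_default_risk(weights, bias, (0.85, 0.15))
risky = predict_default_risk(weights, bias, (0.25, 0.75))
print(f"safe applicant: {safe:.2f}, risky applicant: {risky:.2f}")
```

The point is only the shape of a prediction task: fit on labeled history, then score applicants the model has not seen.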

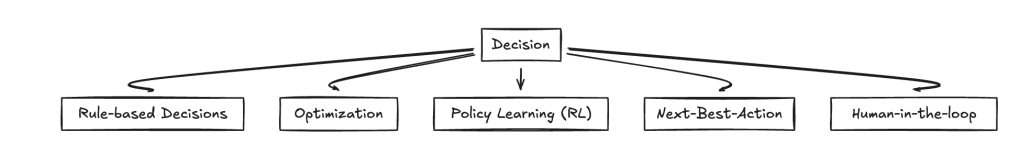

2. Decision

These problems answer: “What action should we take?”

This may involve:

- Rule-based systems

- Optimization logic

- Reinforcement learning

For example, approving or rejecting a loan based on risk thresholds is a decision task built on top of prediction.

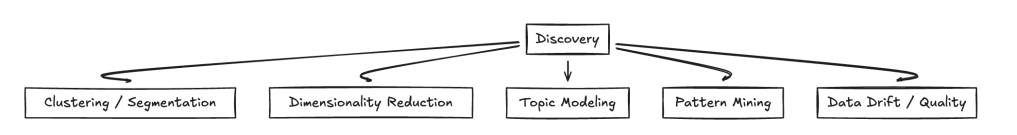

3. Discovery

Exploratory problems where we look for patterns, anomalies, or new structure in data.

Examples:

- Clustering

- Anomaly detection

- Topic modeling

In domains like drug discovery, generative models can explore chemical space, while predictive models evaluate candidates using experimental data.
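Of the discovery examples listed above, anomaly detection is the easiest to sketch in a few lines. The version below uses the modified z-score based on the median absolute deviation (MAD), with the commonly quoted rule-of-thumb constants 0.6745 and 3.5. The transaction counts are invented, and this is one simple technique among many, not a recommendation for any particular dataset.

```python
import statistics


def find_anomalies(values, threshold=3.5):
    """Return the values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread to measure against, so nothing can be flagged
    return [v for v in values if 0.6745 * abs(v - median) / mad > threshold]


# Invented daily transaction counts with one obvious outlier.
daily_transactions = [52, 48, 50, 51, 49, 50, 47, 250]
print(find_anomalies(daily_transactions))  # prints [250]
```

Median-based statistics are used here because a single extreme value inflates the mean and standard deviation, which can hide the very point you are looking for in a small sample.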

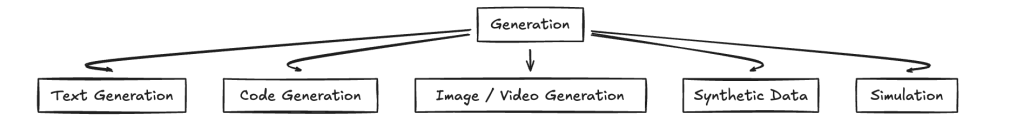

4. Generation

This is where GenAI shines. We create new content:

- Text

- Images

- Code

- Designs

Models include GANs, diffusion models, VAEs, and transformers like LLMs. Whenever we ask, “Write this” or “Generate that,” we’re solving a generation problem.

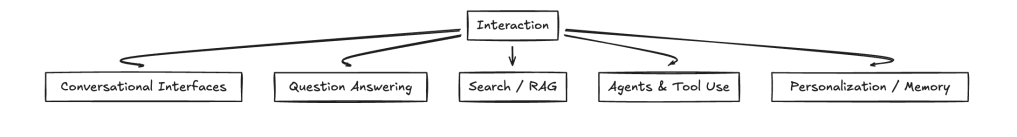

5. Interaction

These involve multi-turn engagement with users or environments:

- Chatbots

- Voice assistants

- Conversational recommender systems

LLMs excel here, but real interactive systems usually combine them with classifiers, retrieval systems, or predictive models behind the scenes.

Key takeaway

GenAI is not the optimal solution for all task types—and it was never meant to be.

Step 2: Reuse More Models, Build Fewer

One important shift we’re seeing is that teams are reusing models far more than building them from scratch.

Before starting a new training pipeline, it often makes sense to:

- Try a pre-trained model

- Fine-tune an existing small language model (SLM) or a model from Hugging Face

- Evaluate performance quickly

Only if those fail should you invest in deeper model development.
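One way to picture this reuse-first workflow is as a loop over options ordered by effort, stopping at the first one that clears your quality bar. In the sketch below the two "models" are toy stand-in functions, not real pre-trained or fine-tuned models, and the holdout examples and the 0.9 accuracy target are invented.

```python
from typing import Callable, List, Optional, Tuple

Model = Callable[[str], str]


def accuracy(model: Model, examples: List[Tuple[str, str]]) -> float:
    correct = sum(1 for text, label in examples if model(text) == label)
    return correct / len(examples)


def first_good_enough(
    options: List[Tuple[str, Model]],
    examples: List[Tuple[str, str]],
    target: float,
) -> Optional[Tuple[str, float]]:
    """Try options in order of increasing effort; stop at the first that meets the bar."""
    for name, model in options:
        score = accuracy(model, examples)
        if score >= target:
            return name, score
    return None  # nothing reusable was good enough, so deeper development is justified


# Toy stand-ins for an off-the-shelf model and a fine-tuned one.
def pretrained(text: str) -> str:
    return "positive"


def fine_tuned(text: str) -> str:
    return "negative" if "refund" in text else "positive"


holdout = [
    ("love it", "positive"),
    ("want a refund", "negative"),
    ("great service", "positive"),
    ("refund please", "negative"),
]
options = [("pre-trained", pretrained), ("fine-tuned", fine_tuned)]
print(first_good_enough(options, holdout, target=0.9))  # prints ('fine-tuned', 1.0)
```

A `None` result is the signal that reuse has run out and a new training pipeline may be worth the cost.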

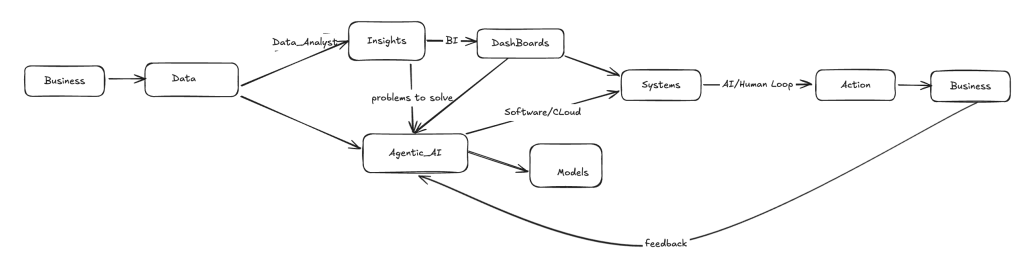

Step 3: Learn to Build Hybrid Systems

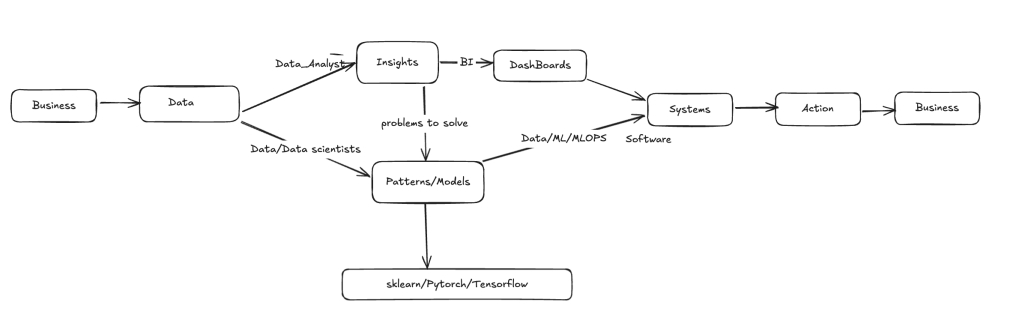

Historically, AI systems looked like this:

- Predictive models → insights

- Humans → decisions and actions

What’s changing now is not the models themselves, but how they’re used.

Today:

- Models become tools inside systems

- GenAI agents handle reasoning, orchestration, and coordination

We’re moving toward systems where agents take actions using models as building blocks—not the other way around.

GenAI excels at reasoning, language, and coordination.

Predictive models are better at:

- Accuracy

- Cost

- Latency

- Auditability

So the natural direction is hybrid agentic systems. In 2025 most of these systems operate with low agency; I expect them to move toward medium and high agency in 2026, and perhaps toward AGI later.

Let’s take everything we’ve covered and reimagine a classic ML system.

Reimagining Fintech Loan Approval as an Agentic System

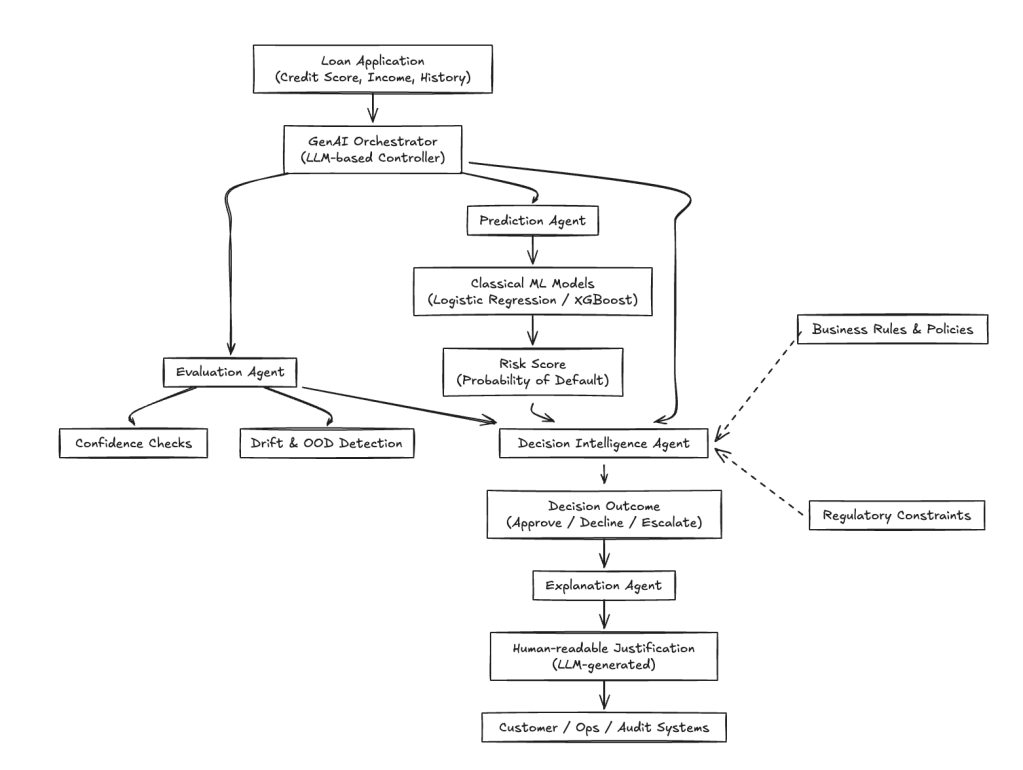

Let’s put this all together. Imagine a fintech building an automated loan approval system. The goal isn’t just prediction—it’s action.

A GenAI Orchestrator (an LLM-based controller) receives a loan application containing credit score, income, and credit history. It recognizes that the problem requires predictive scoring, policy enforcement, and explanation.

The task is then split across four specialized agents: (1) Prediction Agent, (2) Evaluation Agent, (3) Decision Intelligence Agent, and (4) Explanation Agent.

Let’s look at the flow in detail:

Prediction Agent

Invokes an existing credit-risk model (e.g., logistic regression or XGBoost) to estimate default risk. The model is not retrained—it’s used as a tool via an API.
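As a rough sketch of "model as a tool", the agent below does nothing but call an already-trained scorer and package the result. Everything named here is hypothetical: `PredictionAgent`, `existing_credit_model`, the feature names, and the frozen coefficients are invented, and the local function stands in for what would be an API call to the deployed model.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict

Application = Dict[str, float]


def existing_credit_model(application: Application) -> float:
    """Stand-in for a deployed model; the coefficients are made up and frozen."""
    z = 4.0 - 0.008 * application["credit_score"] + 3.0 * application["debt_to_income"]
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class PredictionAgent:
    """Exposes an existing risk model as a tool. It never retrains the model."""

    score_fn: Callable[[Application], float]  # in production, an API client call

    def run(self, application: Application) -> Dict[str, float]:
        return {"default_risk": self.score_fn(application)}


agent = PredictionAgent(score_fn=existing_credit_model)
strong = agent.run({"credit_score": 750, "debt_to_income": 0.2})
weak = agent.run({"credit_score": 550, "debt_to_income": 0.5})
print(strong, weak)
```

Keeping the scorer behind a plain callable means the model can be swapped or versioned without touching the agent.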

Evaluation Agent

Monitors prediction confidence and checks for data drift or out-of-distribution inputs. If uncertainty is high, the case is flagged for human review, which supports robustness and compliance.
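A minimal sketch of such a check might look like the following. It makes two simplifying assumptions that are mine, not the post's: "out-of-distribution" is approximated by a feature falling outside the range seen in training, and "low confidence" by a score landing close to the decision threshold. The ranges, threshold, and margin are invented. Real drift monitoring would compare whole distributions over time rather than single applications.

```python
from typing import Dict, List, Tuple


def evaluate(
    application: Dict[str, float],
    default_risk: float,
    training_ranges: Dict[str, Tuple[float, float]],
    decision_threshold: float = 0.5,
    margin: float = 0.1,
) -> Dict[str, object]:
    """Flag a case for review if inputs look unfamiliar or the score is borderline."""
    reasons: List[str] = []
    for feature, (low, high) in training_ranges.items():
        value = application[feature]
        if not low <= value <= high:
            reasons.append(f"{feature}={value} is outside the training range [{low}, {high}]")
    if abs(default_risk - decision_threshold) < margin:
        reasons.append("prediction is too close to the decision threshold")
    return {"needs_review": bool(reasons), "reasons": reasons}


# Invented ranges, as if summarized from the model's training data.
ranges = {"credit_score": (300, 850), "annual_income": (10_000, 500_000)}

routine = evaluate({"credit_score": 720, "annual_income": 85_000}, 0.05, ranges)
unusual = evaluate({"credit_score": 720, "annual_income": 2_000_000}, 0.05, ranges)
borderline = evaluate({"credit_score": 640, "annual_income": 60_000}, 0.47, ranges)
print(routine["needs_review"], unusual["needs_review"], borderline["needs_review"])
```

Returning the reasons alongside the flag gives reviewers, and any later explanation step, something concrete to work from.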

Decision Intelligence Agent

Applies business rules and regulatory constraints:

- Risk thresholds

- Loan-to-income limits

- Compliance checks

It combines policy logic with predictive outputs to approve, decline, or escalate.
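The rule layer can be ordinary, auditable code. The sketch below assumes a hypothetical policy with a maximum default risk of 0.2 and a maximum loan-to-income ratio of 0.4, plus a `kyc_passed` flag as a stand-in for compliance checks. The order of the rules is also an assumption; a real policy would come from risk and compliance teams.

```python
from typing import Dict, Tuple


def decide(
    application: Dict[str, object],
    default_risk: float,
    needs_review: bool,
    policy: Dict[str, float],
) -> Tuple[str, str]:
    """Combine the model score with policy rules; return (outcome, reason)."""
    if not application["kyc_passed"]:
        return "decline", "failed compliance check"
    if needs_review:
        return "escalate", "flagged for human review"
    if application["loan_amount"] > policy["max_loan_to_income"] * application["annual_income"]:
        return "decline", "loan-to-income limit exceeded"
    if default_risk > policy["max_default_risk"]:
        return "decline", "default risk above threshold"
    return "approve", "all policy checks passed"


# Hypothetical policy values, for illustration only.
policy = {"max_default_risk": 0.2, "max_loan_to_income": 0.4}
applicant = {"kyc_passed": True, "loan_amount": 20_000, "annual_income": 80_000}

print(decide(applicant, 0.08, False, policy))  # prints ('approve', 'all policy checks passed')
print(decide(applicant, 0.35, False, policy))  # prints ('decline', 'default risk above threshold')
print(decide(applicant, 0.08, True, policy))   # prints ('escalate', 'flagged for human review')
```

Because every branch returns a reason string, each outcome can be traced to a specific rule, which is the auditability that predictive models and rule layers are good at.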

Explanation Agent

Uses an LLM to translate the decision into a clear, human-readable justification:

- “Approved due to strong credit score and stable income.”

- “Declined due to high debt ratio under current policy.”

This layer is critical for transparency and trust.
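One hedged way to build this layer is to have the LLM only rephrase reasons that were already recorded upstream, so the explanation cannot drift from the actual decision. In the sketch below, `build_prompt`, `explain`, and `fake_llm` are hypothetical names, and `fake_llm` is an offline stand-in that returns a canned sentence; a real system would pass in a function that calls its LLM provider.

```python
from typing import Callable, Dict, List


def build_prompt(decision: str, reasons: List[str], application: Dict[str, float]) -> str:
    """Assemble a prompt that restricts the LLM to facts recorded upstream."""
    facts = "\n".join(f"- {name}: {value}" for name, value in application.items())
    why = "\n".join(f"- {reason}" for reason in reasons)
    return (
        "Explain this loan decision to the applicant in two plain sentences.\n"
        "Use only the facts and reasons listed; do not add new ones.\n"
        f"Decision: {decision}\n"
        f"Reasons:\n{why}\n"
        f"Application facts:\n{facts}"
    )


def explain(
    decision: str,
    reasons: List[str],
    application: Dict[str, float],
    llm: Callable[[str], str],
) -> str:
    return llm(build_prompt(decision, reasons, application)).strip()


def fake_llm(prompt: str) -> str:
    # Offline stand-in so the sketch runs without network access.
    return " Declined due to high debt ratio under current policy. "


message = explain(
    "decline",
    ["debt-to-income ratio above policy limit"],
    {"credit_score": 640, "debt_to_income": 0.55},
    fake_llm,
)
print(message)  # prints: Declined due to high debt ratio under current policy.
```

Passing the LLM in as a callable keeps the prompt logic testable without a live model.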

Summary

The way we think about AI systems is changing. The core idea is simple: design AI systems around the problem, not the model.

By framing work through task types and combining predictive models with GenAI agents, we move from insights to real-world actions—without overusing LLMs where they don’t belong.

Conclusion

In 2026, successful AI initiatives will not be defined by the size of their models, but by how well their systems are designed to handle scale, cost, uncertainty, and failure. Generative models will play an important role, but only when grounded by retrieval, constrained by decision logic, evaluated continuously, and embedded within systems that degrade gracefully.